
| Indexing Policy & Robots.txt |

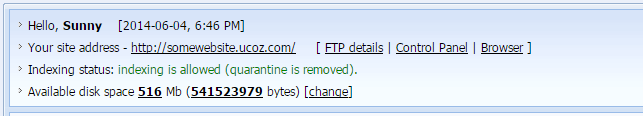

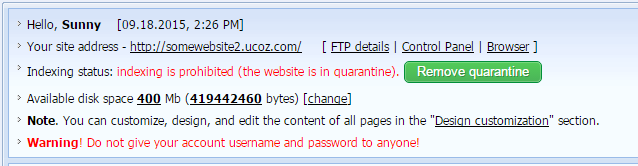

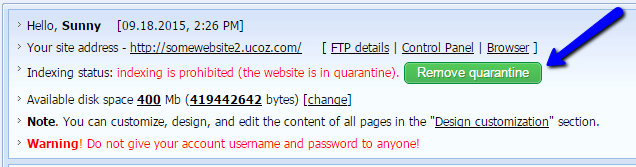

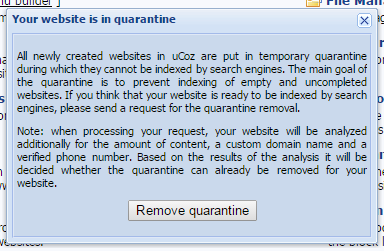

Website's Indexing Status

All uCoz websites have an indexing status that is displayed at the top of the Control Panel's main page (/panel/?a=cp). The status shows whether search engines are allowed to index the website, that is, whether the website is in quarantine. It takes one of two values: "indexing is allowed (quarantine is removed)" or "indexing is prohibited (the website is in quarantine)".

The status "indexing is prohibited (the website is in quarantine)" is assigned by default to all newly created websites.

Quarantine Removal Policy

A website can become available for indexing either automatically (if a premium plan is purchased) or upon the website owner's request. If the website does not have a premium plan and the owner wants the quarantine removed, a request should be submitted from the website's Control Panel. A pop-up window then shows information on the quarantine policy.

After the request has been submitted, the website is checked automatically against a number of criteria: the website's age, presence of a custom domain name, content, a verified phone number, etc. Based on these criteria the system decides whether the quarantine should be removed. We cannot provide a more detailed description of the algorithm.

Note! If the quarantine removal was denied, the next request can be submitted no sooner than 7 days later.

Robots.txt

A website's robots.txt file is located at http://your_website_address/robots.txt.

A website with the default robots.txt is indexed in the best possible way: we set up the file so that only pages with content are indexed, rather than every existing page (e.g. the login or registration page). As a result, uCoz websites are indexed better and get higher priority than sites where all the unnecessary pages are indexed too. That's why we strongly recommend not replacing the default robots.txt with your own.
If you still want to replace the file with your own, create a text file in Notepad or any other text editor and name it "robots.txt". Then upload it to the root folder of your website via File Manager or FTP.

Note: while website indexing is prohibited, the robots.txt file cannot be modified.

The default robots.txt looks as follows:

    User-agent: *
    Allow: /*?page
    Allow: /*?ref=
    Allow: /stat/dspixel
    Disallow: /*?
    Disallow: /stat/
    Disallow: /index/1
    Disallow: /index/3
    Disallow: /register
    Disallow: /index/5
    Disallow: /index/7
    Disallow: /index/8
    Disallow: /index/9
    Disallow: /index/sub/
    Disallow: /panel/
    Disallow: /admin/
    Disallow: /informer/
    Disallow: /secure/
    Disallow: /poll/
    Disallow: /search/
    Disallow: /abnl/
    Disallow: /*_escaped_fragment_=
    Disallow: /*-*-*-*-987$
    Disallow: /shop/order/
    Disallow: /shop/printorder/
    Disallow: /shop/checkout/
    Disallow: /shop/user/
    Disallow: /*0-*-0-17$
    Disallow: /*-0-0-

    Sitemap: http://forum.ucoz.com/sitemap.xml
    Sitemap: http://forum.ucoz.com/sitemap-forum.xml

Robots.txt during the quarantine looks as follows:

    User-agent: *
    Disallow: /

Robots.txt FAQ

Informers are not indexed because they display information that ALREADY exists. As a rule, this information is already indexed on the corresponding pages.

Question: I have accidentally messed up robots.txt. What should I do?

Answer: Delete it. The default robots.txt file will be added back automatically (the system checks whether a website has the file and, if not, restores the default one).

Question: Is there any use in submitting a website to search engines if the quarantine hasn't been removed yet?

Answer: No, your website won't be indexed while in quarantine.

Question: Will the robots.txt file be replaced automatically after the quarantine has been removed? Or should I update it manually?

Answer: It will be updated automatically.

Question: Is it possible to delete the default robots.txt?

Answer: You can't delete it, as it's a system file, but you can add your own file. However, we don't recommend doing this, as stated above. During the quarantine it is impossible to upload a custom robots.txt.

Question: What should I do to forbid indexing of the following pages?

    http://site.ucoz.com/index/0-4
    http://site.ucoz.com/index/0-5

Answer: Add the following lines to the robots.txt file:

    Disallow: /index/0-4
    Disallow: /index/0-5

Question: I have forbidden indexing of some links by means of robots.txt but they are still displayed. Why is that?

Answer: By means of robots.txt you can forbid indexing of pages, not links.

Question: I want to make some changes in my robots.txt file. How can I do this?

Answer: Download it to your PC, edit it, and then upload it back via File Manager or FTP.

I'm not active on the forum anymore. Please contact other forum staff.
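To make the interplay of the Allow and Disallow lines in the default file easier to follow, here is a minimal Python sketch. It assumes the matching behaviour described in RFC 9309: `*` matches any run of characters, a trailing `$` anchors the end of the path, the longest matching pattern wins, and Allow wins a tie. Individual search engines may differ in the details. The names `DEFAULT_RULES`, `QUARANTINE_RULES` and `is_allowed` are made up for this illustration, only a subset of the default file is included, and the sample paths are hypothetical.

```python
import re

# A subset of the default rules quoted above, as (directive, pattern) pairs.
DEFAULT_RULES = [
    ("Allow", "/*?page"),
    ("Allow", "/*?ref="),
    ("Allow", "/stat/dspixel"),
    ("Disallow", "/*?"),
    ("Disallow", "/stat/"),
    ("Disallow", "/index/1"),
    ("Disallow", "/index/3"),
    ("Disallow", "/panel/"),
    ("Disallow", "/*-*-*-*-987$"),
]

# The rules served while a site is in quarantine.
QUARANTINE_RULES = [("Disallow", "/")]


def _to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # '*' becomes '.*'; everything else is matched literally.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules, path):
    """Longest matching pattern wins; Allow wins a tie; no match means allowed."""
    best_len, allowed = -1, True
    for directive, pattern in rules:
        if not pattern:
            continue  # empty patterns are ignored in this sketch
        if _to_regex(pattern).match(path):
            is_allow = directive.lower() == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


for sample in ["/news/?page2", "/load/?sort=1", "/stat/dspixel", "/stat/123", "/blog/"]:
    print(sample, is_allowed(DEFAULT_RULES, sample))
print("/blog/ in quarantine:", is_allowed(QUARANTINE_RULES, "/blog/"))
```

Under these assumptions, a paginated URL such as `/news/?page2` stays indexable because `Allow: /*?page` is more specific than `Disallow: /*?`, while other query-string URLs are blocked. The sketch does its own matching because, as far as I know, Python's standard `urllib.robotparser` only does plain prefix matching and does not interpret `*` or `$`.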

|

Do I have to wait one month, or pay, before the Disallow in robots.txt is lifted?

http://agarioprivate.ucoz.com is not indexed by Google. Do I really have to wait 1 month? |

serkangamis, it is not necessary to wait for one month with the new indexing policy. Please see the details at http://forum.ucoz.com/forum/3-20161-1


|

eDy9419, please note the difference between the robots.txt file and the website's sitemap: http://elliniki-musiki.ucoz.com/sitemap.xml

The robots.txt file will never update itself since it doesn't have to. It only disallows the indexing of several system-based pages.

hey i'm joe and i do not work for the company anymore, please contact tech support for help!

sometimes i lurk here |

eDy9419, you don't have to do anything. The way it looks right now is just perfect.

robots.txt decides what is allowed to be indexed and what isn't. It will never change itself, and it doesn't have to. sitemap.xml is the sitemap of your website; it contains the links to your entries.

The logic behind every robots.txt file is that it is used to disallow indexing by search engines. If a site doesn't have a robots.txt file, each and every page is indexable. uCoz's robots.txt contains:

The sitemap, containing links to the entries of your site, is declared in the robots.txt file, so the search engines can find and index it. You don't need to take any action, since the sitemap is updated automatically every few days. You can also force an update by deleting the sitemap in File Manager; the system will then recreate it with up-to-date information.
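To see how a sitemap is declared in robots.txt, here is a small Python sketch that reads the `Sitemap:` lines the way a crawler might. It uses `urllib.robotparser` from the standard library; `site_maps()` needs Python 3.8 or newer. The `ROBOTS_TXT` text is a shortened copy of the default file quoted in the first post, and the helper name `declared_sitemaps` is made up for this example.

```python
from urllib.robotparser import RobotFileParser

# Shortened copy of the default file; the Sitemap lines are the ones quoted in this thread.
ROBOTS_TXT = """\
User-agent: *
Disallow: /panel/
Disallow: /admin/

Sitemap: http://forum.ucoz.com/sitemap.xml
Sitemap: http://forum.ucoz.com/sitemap-forum.xml
"""


def declared_sitemaps(robots_txt):
    """Return the sitemap URLs declared in a robots.txt text (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


for url in declared_sitemaps(ROBOTS_TXT):
    print(url)
```

The point is only that the sitemap address travels with robots.txt, so nothing has to be submitted by hand for a crawler to discover it.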

|

So... didn't expect this to happen.

I'm reviving a website of mine that has been neglected. I re-purchased my domain and am using uCoz because it's awesome! Anyway, I didn't have my old website entirely backed up on my computer, so I've been using the Internet Archive to move pages over to my NEW website (fairtomidlandfans.com).

Well... now the Internet Archive won't let me access its archived pages because of the new robots.txt that uCoz put in place when it quarantined my website. I have confirmed my phone number, have a layout, and have already imported my "audio" page with download links... how much longer until my website isn't quarantined anymore? My website was already indexed in Google: if you looked up Fair to Midland and their music, FTMfans showed up at the top of the hits (just like any other major fansite; we've even been contacted by SOADfans and given kudos for our work).

Anyway... am I forced to sit and wait until my quarantine is over to access my old pages on the Internet Archive? Or is there any way for uCoz to edit my robots.txt to allow access to the Internet Archive but still keep my website quarantined?

Idk, I'm a little frustrated right now and didn't expect any of this to happen. I'd assumed the indexed pages they have archived would stay there... I didn't think anything I'm currently doing with my domain would screw with the Internet Archive. -_- |

z0mbief3tus, currently your robots.txt file is not blocked and the website isn't quarantined: http://fairtomidlandfans.com/robots.txt
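A rough way to check this yourself is to look at what the site's robots.txt says about the root path. The Python sketch below assumes you have already copied the file's text from http://your_website_address/robots.txt (fetching is left out on purpose); the helper name `looks_quarantined` and the `OPEN_SITE` sample are made up for this example, and the check is only a heuristic based on the quarantine file quoted in the first post.

```python
from urllib.robotparser import RobotFileParser


def looks_quarantined(robots_txt):
    """Return True if the rules block the site root for every crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # robotparser matches by prefix, so "Disallow: /" blocks every path, including "/".
    return not parser.can_fetch("*", "/")


# The quarantine file quoted in the first post.
QUARANTINE = "User-agent: *\nDisallow: /\n"
# A hypothetical non-quarantined file that only blocks system pages.
OPEN_SITE = "User-agent: *\nDisallow: /panel/\nDisallow: /admin/\n"

print(looks_quarantined(QUARANTINE))
print(looks_quarantined(OPEN_SITE))
```

If the function reports a quarantine for your own file, the Control Panel status is still the authoritative place to confirm it.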


|

Hello. I have my domain and I transferred it to uCoz a couple of weeks ago. My website has existed for at least 6 years, so I just recreated it in the new location. I also confirmed my phone number with uCoz. However, the quarantine is still there. When I tried to remove it last (an hour ago) I got a message "not enough content." Like I said I've had this site for quite a while, and it has just enough content for its purpose. What can I do to remove quarantine? Thanks.

|

petrov, please provide the URL of your website. Keep in mind that there must be a 7-day gap between quarantine removal requests.

|
