| Forum moderator: bigblog |


| 301 Redirect for new domain |

I attached a domain name to a ucoz.com subdomain using method 3.

I enabled this option:

Quote: "Redirect from the system subdomain to the connected one automatically: A 301 redirect is set up from the default subdomain to the connected domain. When opening the website through the default subdomain, the user will be automatically redirected to this page on the connected domain. We advise you to enable this feature."

I have added HTTPS to the new domain. Then I went to Google Webmaster Tools and added the new site (domain). Now the old uCoz subdomain site can't be fetched, because its robots.txt doesn't allow it. The result: most uCoz subdomain pages that were on the first page of Google are now either gone or on pages 2 to 5, and the new domain is not in the first 50 pages. Doesn't the robots.txt that prohibits Google from fetching the old uCoz subdomain stop Google from taking the 301 redirect into account, along with all the PageRank that goes with it? Has something gone terribly wrong, or do I just have to wait longer? Help is needed! Thank you.

Thassos Island Portal:

https://thassos.one

Post edited by Urs - Saturday, 2017-06-24, 11:09 AM
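To see whether the "Redirect from the system subdomain" option is really answering with a permanent redirect, you can request a page on the old subdomain without following redirects and look at the raw status code and `Location` header. Below is a minimal sketch using only Python's standard library; `check_redirect` is a hypothetical helper, not a uCoz or Google tool:

```python
import http.client
from typing import Optional, Tuple
from urllib.parse import urlsplit


def check_redirect(url: str, timeout: float = 10.0) -> Tuple[int, Optional[str]]:
    """Fetch `url` once WITHOUT following redirects.

    Returns (HTTP status code, Location header or None).
    """
    parts = urlsplit(url)
    # Pick plain HTTP or HTTPS based on the URL scheme.
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        target = parts.path or "/"
        if parts.query:
            target += "?" + parts.query
        # http.client never follows redirects by itself, so the status and
        # Location header returned here are exactly what the server sent.
        conn.request("GET", target)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()
```

For example, `check_redirect("https://thassos.ucoz.com/")` should report a 301 status and a `https://thassos.one/...` location if the redirect is active; a 302 would indicate a temporary redirect instead. This only checks the server's answer; whether a crawler is allowed to request the old URL at all is decided separately by robots.txt.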

|

Urs, after a while, the old uCoz subdomain links will disappear from the search results and the new ones will appear instead. It's a question of time; you can't really do anything about this.

Hey, I'm Joe and I don't work for the company anymore. Please contact tech support for help!

Sometimes I lurk here. |

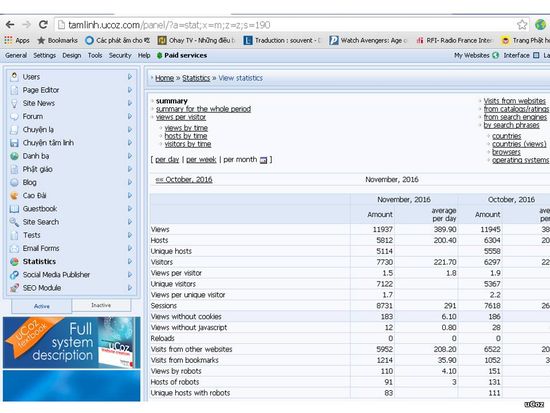

Attaching a new domain was a disaster for me. Google removed all my old links, and my website went from 10,000 views per day to 10 views per day. I have been waiting 4 or 5 months, and nothing has happened.

Be careful to choose the right domain from the start.

Post edited by Good - Sunday, 2017-06-25, 1:12 AM

|

Before attaching the new domain:

Attachments: 2264962.jpg (106.3 Kb)

|

Six months after attaching the new domain:

Attachments: 9158053.jpg (96.8 Kb)

Post edited by Good - Sunday, 2017-06-25, 1:14 AM

|

Good, this is a common issue, and it happens with all hosting providers that offer a free system subdomain. It is one of the reasons we decided to check whether a website has a domain name attached to it when attempting to remove the website's quarantine.

|

Quote: "Urs, after a while, the old uCoz subdomain links will disappear from the search results and the new ones will appear instead. It's a question of time, you can't really do anything about this."

I see. The problem I think might still exist is that Google is no longer allowed to fetch the old site... could this prevent it from seeing the 301 redirect? For example, the title tag shown for the new site is still the same as on the old site, even though I changed it and requested a new fetch for the new domain 10 times. I can't change robots.txt in the File Manager because that would also change it for the new domain, while the old subdomain's robots.txt is hidden!

For the old site, https://thassos.ucoz.com/, the robots.txt is:

    User-agent: *
    Disallow: /

For the new site, https://thassos.one/, it is:

    User-agent: Yandex
    Disallow: /a/
    Disallow: /stat/
    Disallow: /index/1
    Disallow: /index/2
    Disallow: /index/3
    Disallow: /index/5
    Disallow: /index/7
    Disallow: /index/8
    Disallow: /index/9
    Disallow: /panel/
    Disallow: /admin/
    Disallow: /secure/
    Disallow: /informer/
    Disallow: /mchat
    Disallow: /search
    Disallow: /shop/order/
    Disallow: /abnl/
    Disallow: /google
    Disallow: /twitter
    Disallow: /facebook
    Disallow: /yandex
    Disallow: /vkontakte

    User-agent: *
    Allow: /*?page*
    Disallow: /a/
    Disallow: /api/
    Disallow: /stat/
    Disallow: /index/1
    Disallow: /index/2
    Disallow: /index/3
    Disallow: /index/5
    Disallow: /index/7
    Disallow: /index/8
    Disallow: /index/9
    Disallow: /panel/
    Disallow: /admin/
    Disallow: /secure/
    Disallow: /informer/
    Disallow: /mchat
    Disallow: /search
    Disallow: /shop/order/
    Disallow: /abnl/
    Disallow: /*?*
    Disallow: /*_escaped_fragment_=
    Disallow: /blog/*-987
    Disallow: /blog/*-0-

    Sitemap: https://thassos.one/sitemap.xml
    Sitemap: https://thassos.one/sitemap-forum.xml

Post edited by Urs - Sunday, 2017-06-25, 2:56 PM
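The concern above can be checked with Python's standard `urllib.robotparser`. A minimal sketch, assuming Googlebot follows the `User-agent: *` group: with the old subdomain's `Disallow: /`, every page is off-limits, so a compliant crawler never requests the old URL and never receives its 301 response. `can_crawl` and the page path `/blog/some-post` are hypothetical, and `NEW_ROBOTS` is only a trimmed excerpt of the new file: `urllib.robotparser` does plain prefix matching and does not implement Google's `*` wildcards, so rules such as `Disallow: /*?*` would effectively never match there.

```python
from urllib.robotparser import RobotFileParser

# robots.txt served on the old system subdomain (as quoted above).
OLD_ROBOTS = """\
User-agent: *
Disallow: /
"""

# Trimmed excerpt of the new domain's robots.txt: prefix rules only,
# since urllib.robotparser ignores Google-style "*" wildcards.
NEW_ROBOTS = """\
User-agent: *
Disallow: /a/
Disallow: /api/
Disallow: /panel/
Disallow: /admin/
Disallow: /search
"""


def can_crawl(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if `robots_txt` lets `agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    page = "/blog/some-post"  # hypothetical page path
    print(can_crawl(OLD_ROBOTS, "https://thassos.ucoz.com" + page))  # False
    print(can_crawl(NEW_ROBOTS, "https://thassos.one" + page))       # True
    print(can_crawl(NEW_ROBOTS, "https://thassos.one/panel/"))       # False
```

An empty robots.txt, which is what Google's site-move guidance asks for on the source site, allows everything: `can_crawl("", "https://thassos.ucoz.com/blog/some-post")` returns `True`.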

|

Quote: "The problem I think might still exist is that Google now is no longer allowed to fetch the old site,"

If you want Google to fetch both domains (thassos.ucoz.com and thassos.one), your website can get banned by Google for duplicate content. Attaching a domain name will always result in the old uCoz links being removed from Google and replaced by the ones from the new domain. It just takes time, and that depends only on Google, not uCoz. There's no way, and there shouldn't be any way, of keeping both versions of the links.

|

Quote: "If you want to fetch both domains (thassos.ucoz.com and thassos.one) your website can get banned by Google for duplicated content. Attaching a domain name will always result in having the old uCoz links removed from Google and getting them replaced by the ones from the new domain. It just needs time and that only depends on Google, not uCoz. There's no way and there shouldn't be any way of keeping both versions of the links."

I get it now, 100%. I will wait. Thank you so much for the advice and information on this matter.

|

Quote: "Urs, you're welcome and if you need any further assistance, we're always here to help!"

Will Google see the 301 permanent redirect and move the PageRank of each page to the new domain? Do you have any past experience with uCoz regarding this matter? I ask because some people say:

Quote: "Blocking in robots.txt urls which are also being redirected means the redirection will never be found."

Quote: "Don't use robots to block duplicate content! Often webpages are accessible by a number of different URLs (this is often true in content management systems like Drupal). The temptation is to block the unwanted URLs so that they are not crawled by Google. In fact, the best way to handle multiple URLs is to use a 301 redirect and/or the canonical META tag."

Quote: "Don't combine robots and 301s. Most commonly, people realise that Google is crawling webpages it shouldn't, so they block those pages using robots.txt and set up a 301 redirect to the correct pages hoping to kill two birds with one stone (i.e. remove unwanted urls from the index and pass PageRank juice to the correct pages). However, Google will not follow a 301 redirect on a page blocked with robots.txt. This leads to a situation where the blocked pages hang around indefinitely because Google isn't able to follow the 301 redirect."

I don't want my high-ranking site to drop back to PageRank zero, be moved to page 20+, and lose all my clients, because this is what is happening right now! I have lost all my positions, and Google is complaining that it can't reach the subdomain. So please, uCoz, unblock the robots.txt on thassos.ucoz.com and just apply the permanent 301 redirect! If I am wrong, please correct me.

Added (2017-06-26, 10:54 PM):

From Google: https://support.google.com/webmasters/answer/6033080

Quote: "Update your robots.txt files: On the source site, remove all robots.txt directives. This allows Googlebot to discover all redirects to the new site and update our index. On the destination site, make sure the robots.txt file allows all crawling. This includes crawling of images, CSS, JavaScript, and other page assets, apart from the URLs you are certain you do not want crawled."

Why doesn't uCoz know this, and why does it break sites? Do I have to enable this option?

Quote: "Allow default subdomain indexing (by search engines): This feature allows search engines to index the default subdomain. If a 301 redirect is set up, the default subdomain and the connected domain will be merged together. We advise you to enable this feature."

Post edited by Urs - Monday, 2017-06-26, 11:09 PM

|

Urs, enabling default subdomain indexing is a step in the right direction. Give it a week then see if your results have improved.

If they haven't, recheck your robots.txt files and, if necessary, upload your own to override the existing set. You're correct about blocked pages and their 301 redirect status: white hat search engines won't request a URL that is blocked by robots.txt, so they will never hit the redirect or update it in their records.

Jack of all trades in development, design, strategy.

Working as a Support Engineer. Been here for 13 years and counting. |

Quote: "Urs, enabling default subdomain indexing is a step in the right direction. Give it a week then see if your results have improved."

Now the robots.txt on https://thassos.ucoz.com looks like this:

Code:

    User-agent: Yandex
    Disallow: /a/
    Disallow: /stat/
    Disallow: /index/1
    Disallow: /index/2
    Disallow: /index/3
    Disallow: /index/5
    Disallow: /index/7
    Disallow: /index/8
    Disallow: /index/9
    Disallow: /panel/
    Disallow: /admin/
    Disallow: /secure/
    Disallow: /informer/
    Disallow: /mchat
    Disallow: /search
    Disallow: /shop/order/
    Disallow: /abnl/
    Disallow: /google
    Disallow: /twitter
    Disallow: /facebook
    Disallow: /yandex
    Disallow: /vkontakte

    User-agent: *
    Allow: /*?page*
    Disallow: /a/
    Disallow: /api/
    Disallow: /stat/
    Disallow: /index/1
    Disallow: /index/2
    Disallow: /index/3
    Disallow: /index/5
    Disallow: /index/7
    Disallow: /index/8
    Disallow: /index/9
    Disallow: /panel/
    Disallow: /admin/
    Disallow: /secure/
    Disallow: /informer/
    Disallow: /mchat
    Disallow: /search
    Disallow: /shop/order/
    Disallow: /abnl/
    Disallow: /*?*
    Disallow: /*_escaped_fragment_=
    Disallow: /blog/*-987
    Disallow: /blog/*-0-

    Sitemap: https://thassos.ucoz.com/sitemap.xml
    Sitemap: https://thassos.ucoz.com/sitemap-forum.xml

I have also used the "Change of Address" tool in Search Console (Webmaster Tools).

Quote: "If they haven't then recheck your robots.txt files and if necessary upload your own to override the existing set."

But you can only override the robots.txt for https://thassos.one once you have attached a domain, right? (Not for https://thassos.ucoz.com, where the only option is the default robots.txt.)

The sitemap for https://thassos.ucoz.com is still not updated (I clicked "Resubmit" in Search Console), and it still gives 3,241 warnings ("Sitemap contains URLs which are blocked by robots.txt."). But according to uCoz the robots.txt has been updated, and I went to the robots.txt Tester and clicked "Ask Google to update" ("Submit a request to let Google know your robots.txt file has been updated.").

Quote: "If you want to fetch both domains (https://thassos.ucoz.com and https://thassos.one) your website can get banned by Google for duplicated content. Attaching a domain name will always result in having the old uCoz links removed from Google and getting them replaced by the ones from the new domain. It just takes time and that only depends on Google, not uCoz. There's no way and there shouldn't be any way of keeping both versions of the links."

It seems that Google doesn't like the old domain being blocked, because then it will not see the 301 redirect. Could you make a step-by-step tutorial so that future users won't ruin their hard-won sites like I did because of the lack of clarity on this issue? (For example: always enable default subdomain indexing when you move to a new domain and want to keep your PageRank.)

Can you change the sitemap change frequency from weekly to daily (<changefreq>weekly</changefreq> -> <changefreq>daily</changefreq>)?

Post edited by Urs - Tuesday, 2017-06-27, 9:59 AM
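For reference, the change requested above amounts to rewriting every `<changefreq>` element in the sitemap XML. A minimal Python sketch, assuming you are working on a downloaded copy of the file (this thread does not say whether uCoz would serve an edited sitemap); `set_changefreq` is a hypothetical helper. Per the sitemaps.org protocol, `<changefreq>` is only a hint to crawlers, not a command.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemap files (sitemaps.org protocol).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
# Values the sitemaps.org protocol allows for <changefreq>.
ALLOWED = {"always", "hourly", "daily", "weekly", "monthly", "yearly", "never"}


def set_changefreq(sitemap_xml: str, new_value: str = "daily") -> str:
    """Return the sitemap text with every <changefreq> set to `new_value`."""
    if new_value not in ALLOWED:
        raise ValueError(f"invalid changefreq: {new_value!r}")
    # Serialize the sitemap namespace as the default (unprefixed) one,
    # so the output keeps xmlns="..." instead of ns0: prefixes.
    ET.register_namespace("", SITEMAP_NS)
    root = ET.fromstring(sitemap_xml)
    for node in root.iter(f"{{{SITEMAP_NS}}}changefreq"):
        node.text = new_value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    sample = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://thassos.one/</loc>"
        "<changefreq>weekly</changefreq></url></urlset>"
    )
    print(set_changefreq(sample))
```

The output omits the `<?xml ...?>` declaration; add it back before uploading if your tooling expects it.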

|

Urs, first of all, when you update the robots.txt file, it will be updated for both domains, or at least it should be. Your robots.txt file is correct because it only blocks system resources.

Secondly, you mentioned that you sent a request to let Google know your robots.txt file has been updated. This can take up to a few days. The 301 redirect works on your website (see: http://take.ms/oNXm4), so everything should be good. You just need to be patient!

|

Quote: "Can you change sitemap change frequency from weekly to daily <changefreq>weekly</changefreq> -> <changefreq>daily</changefreq> ?"

That might be a bit too much to do for all websites on a server. Don't forget that you can update your sitemap manually at any time by removing it in the File Manager.

|


Need help? Contact our support team via the contact form or email us at support@ucoz.com.